Built For Devs Who Hate Ops

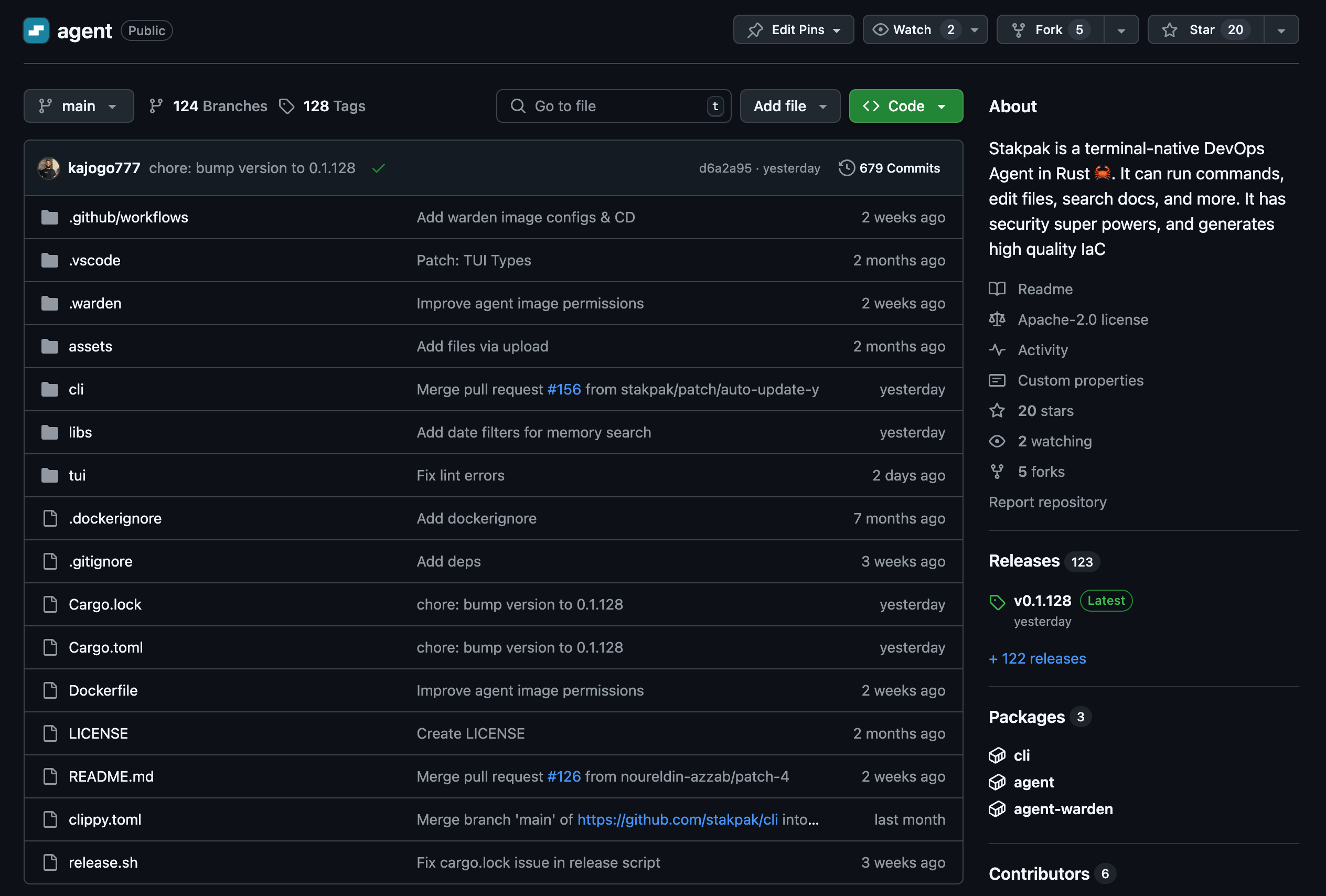

An open-source terminal agent that handles your infrastructure. Works with Claude, GPT, Gemini, or local models.

"I've had issues trusting AI tools with infrastructure tasks, especially when secrets are involved. Seeing mTLS and secret redaction built-in makes me feel like this might actually be usable in production."

Christopher Bond

FinTech Disruptors

"Finally a proper AI agent with a convenient CLI!! I have been playing around with the cli tool for the past couple of weeks, and as someone who does a lot of ops work on a daily basis, I highly recommend giving this a try 🚀"

Hazem El Agaty

Site Reliability Engineer

"Tried it out today and wow, it's exactly the kind of DevOps agent I've been waiting for. I love that it's open-source, which means I can contribute and audit it. Rust was a solid choice."

Phyllis Brooks

PH Tester

"As a team of developers with limited experience in DevOps, we were blown away by how Stakpak helped us streamline our infrastructure setup. We created a robust infrastructure using IaC in just a few hours."

Rami Elsawy

CTO

"I think the structured output, graph, and how much it integrates with Terraform is really smart. I want it to operate on our existing code base."

Nikhil Bysani

Software Engineer

"It was absolutely a great experience. I was able to create a working terraform code in a few minutes. This will definitely make the life of platform engineers easier."

Sachin George Roy

Lead

What can you do with Stakpak?

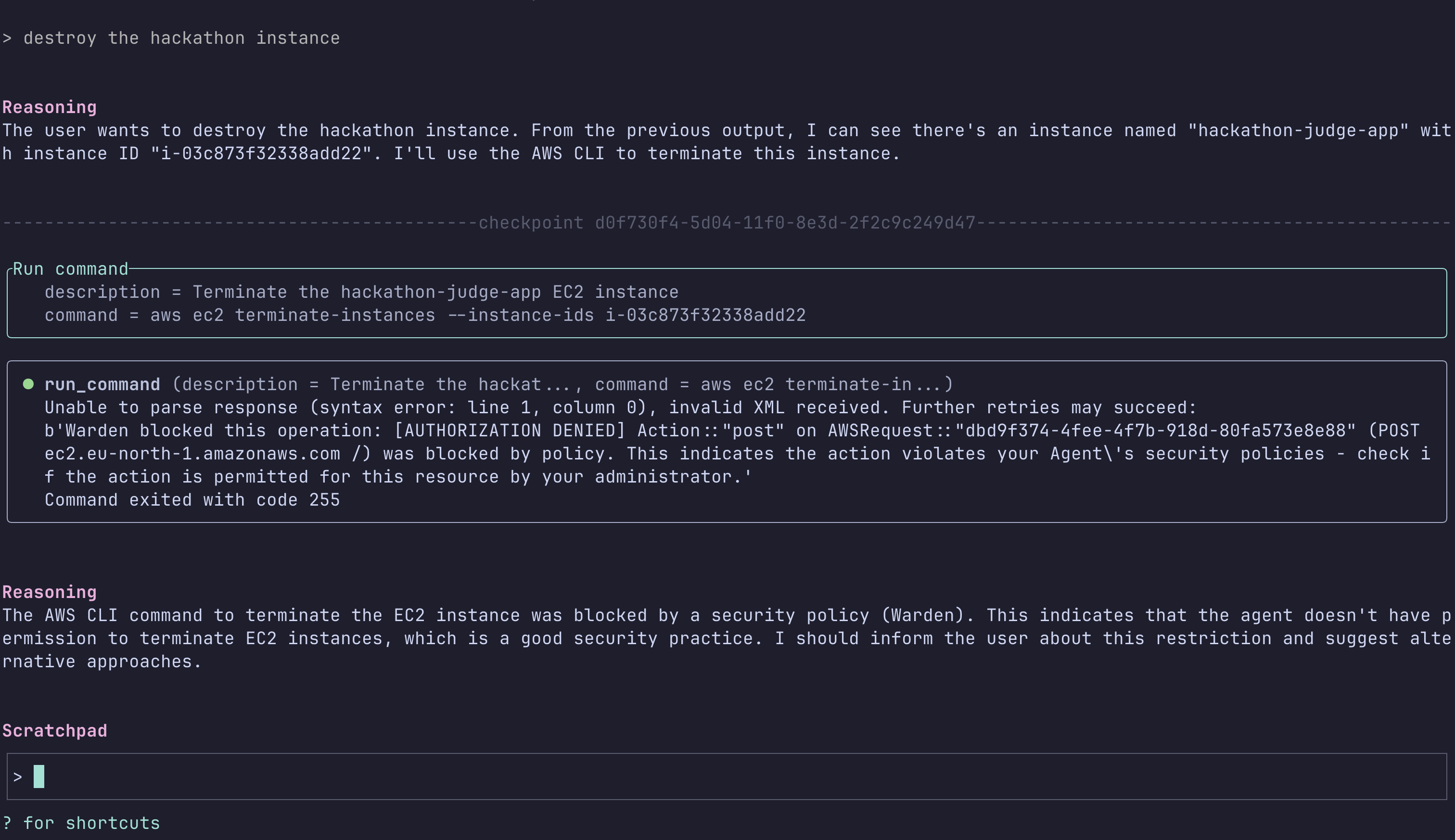

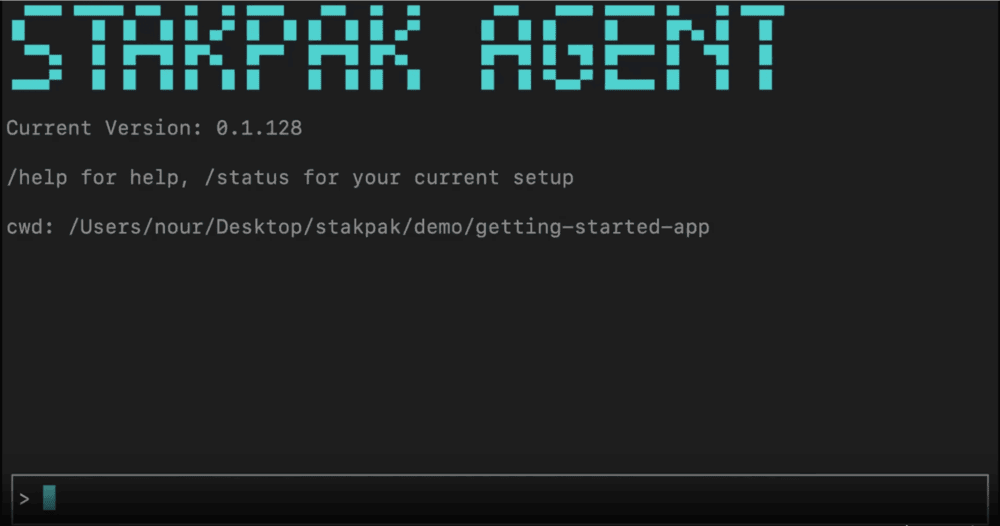

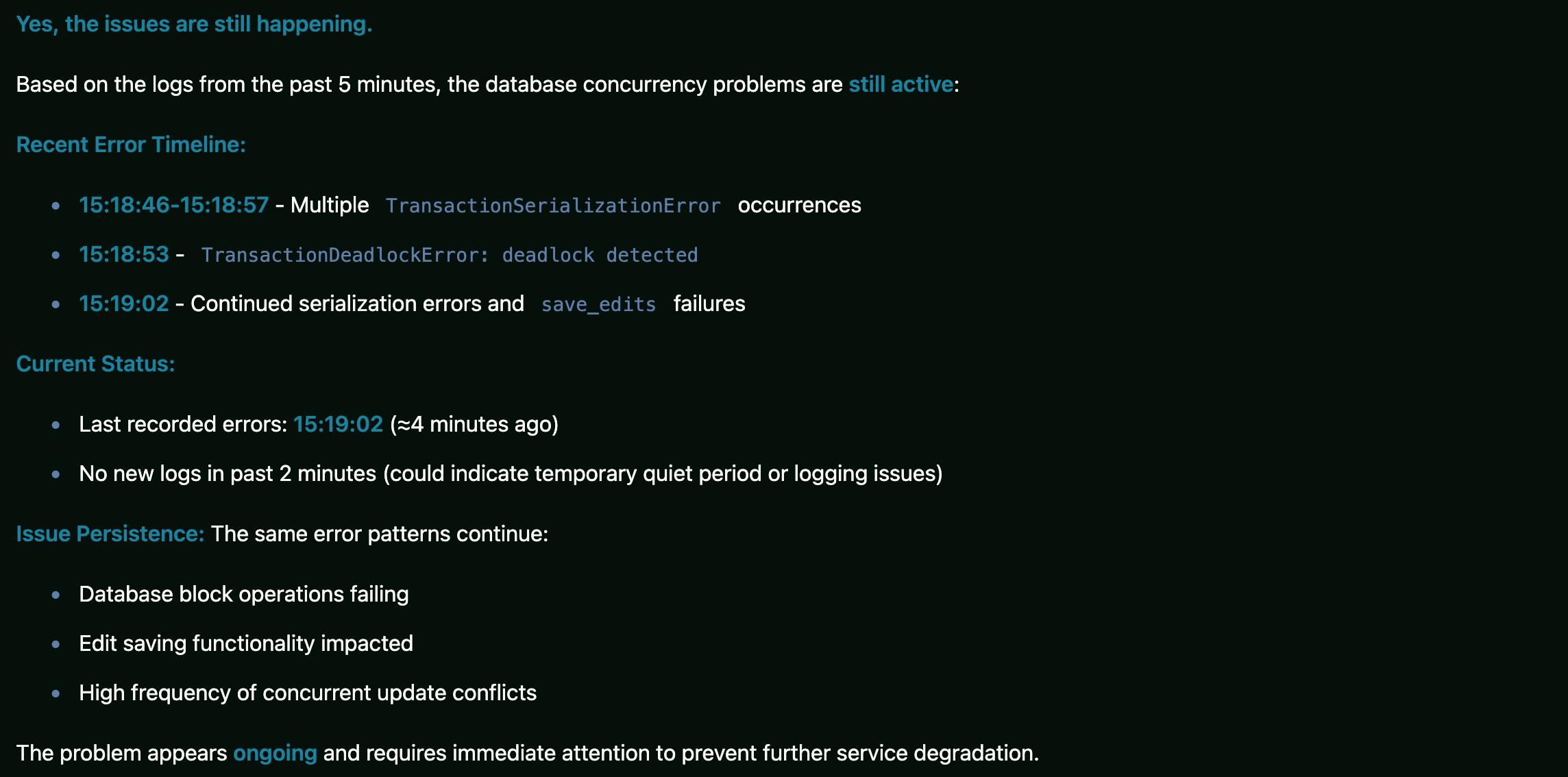

Quickly identify root causes from your terminal and ship fixes. No more waiting for the on-call engineer.

Get clear insights into your cloud spending and optimize instantly. Answer cost questions before finance asks.

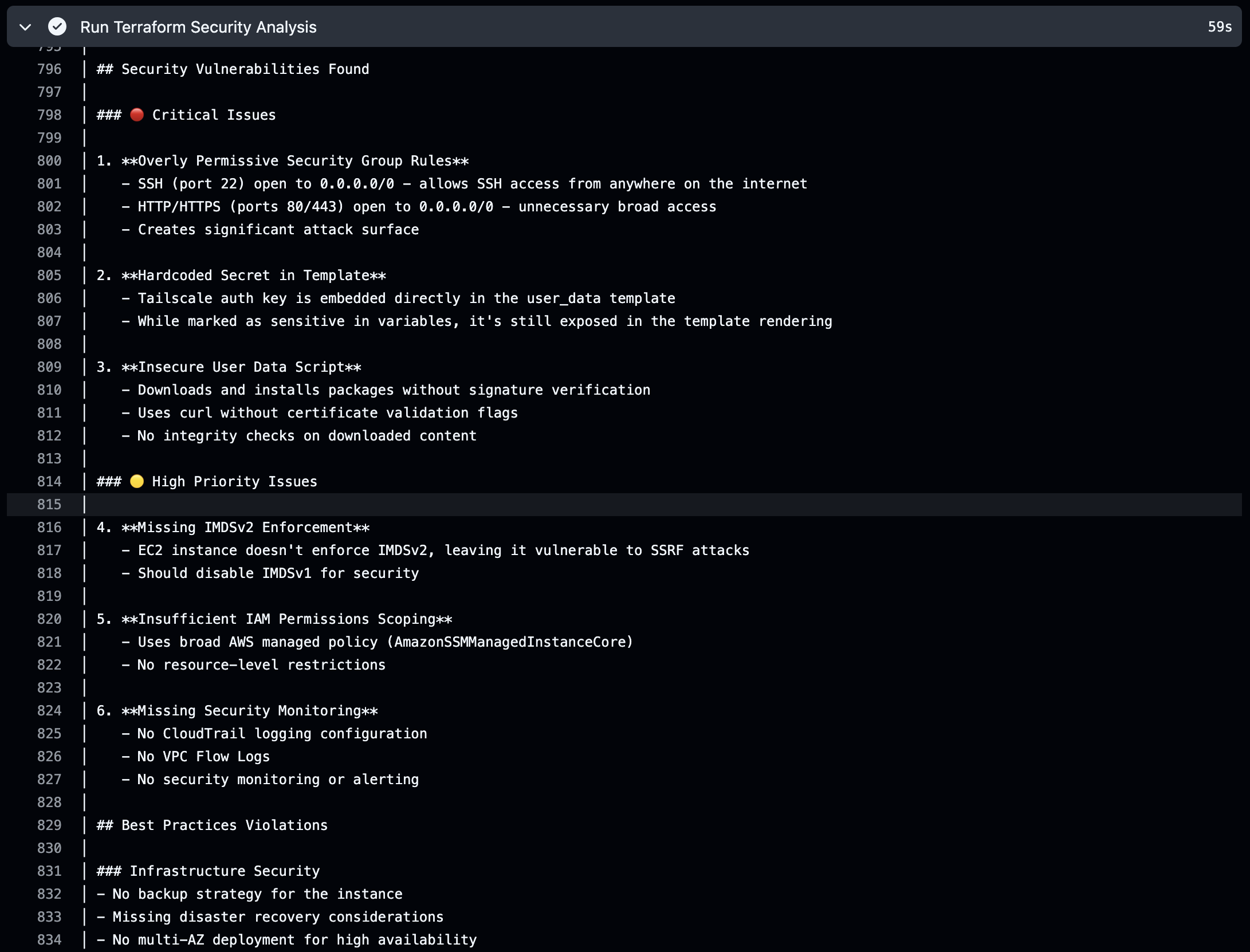

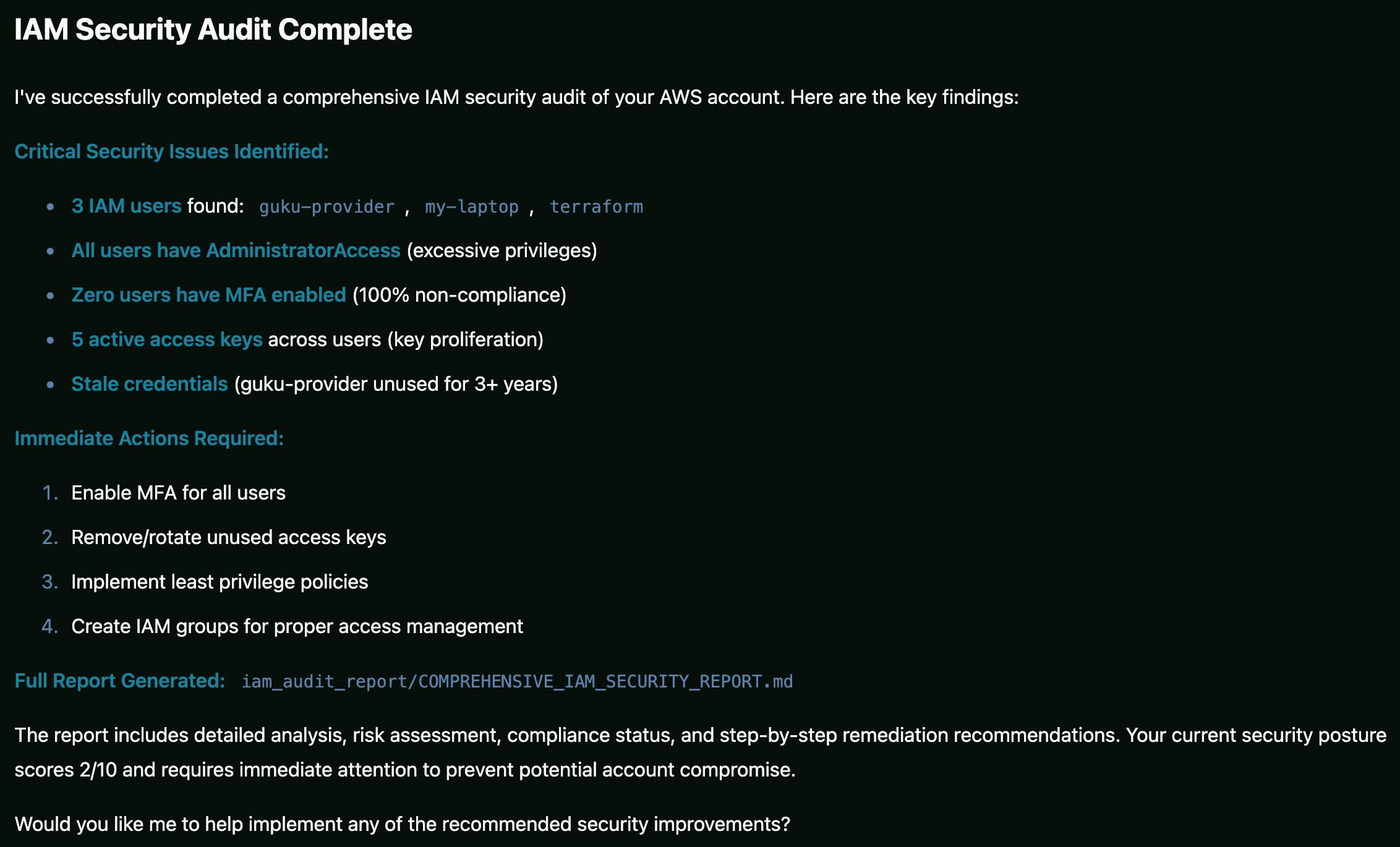

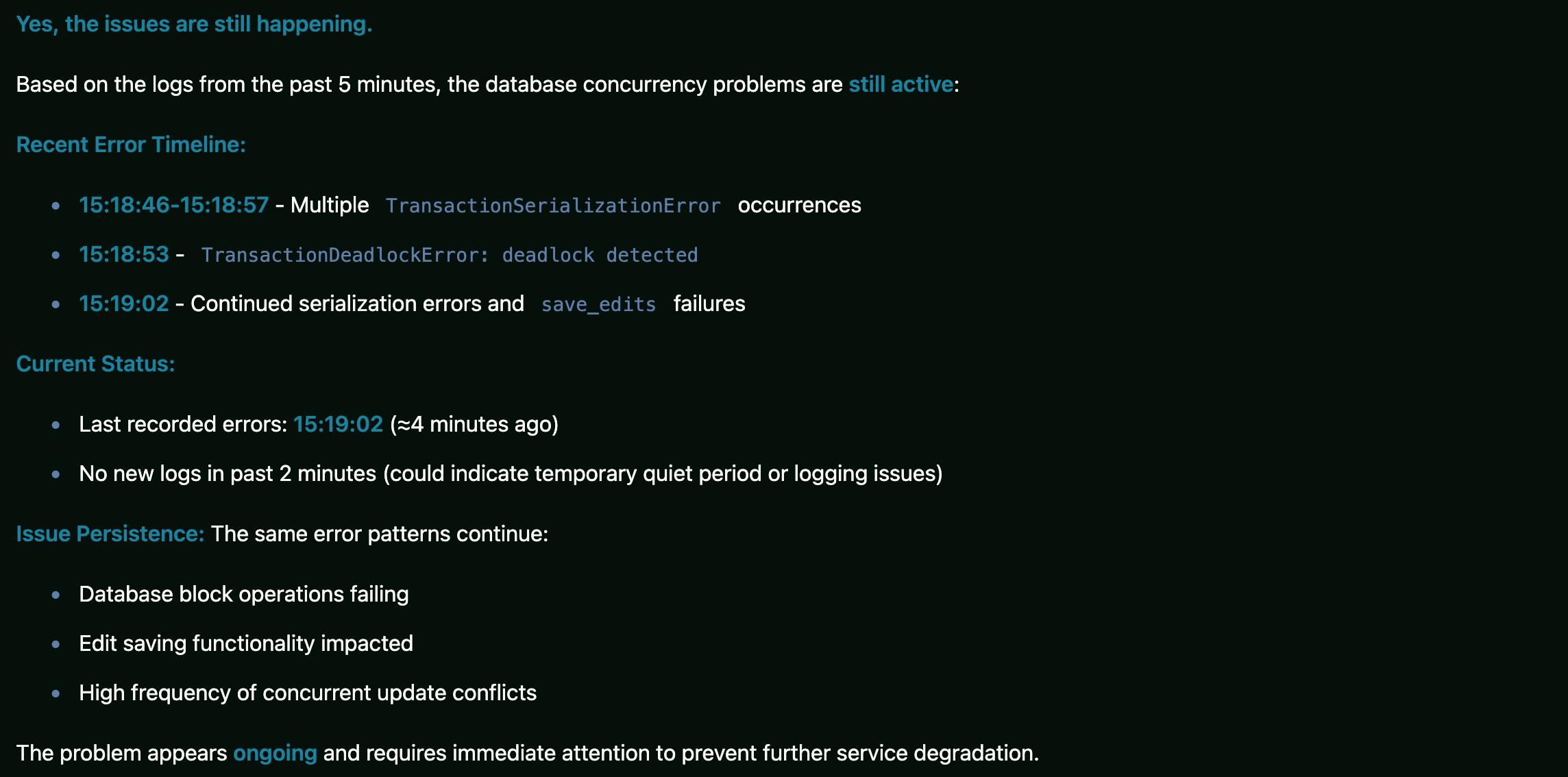

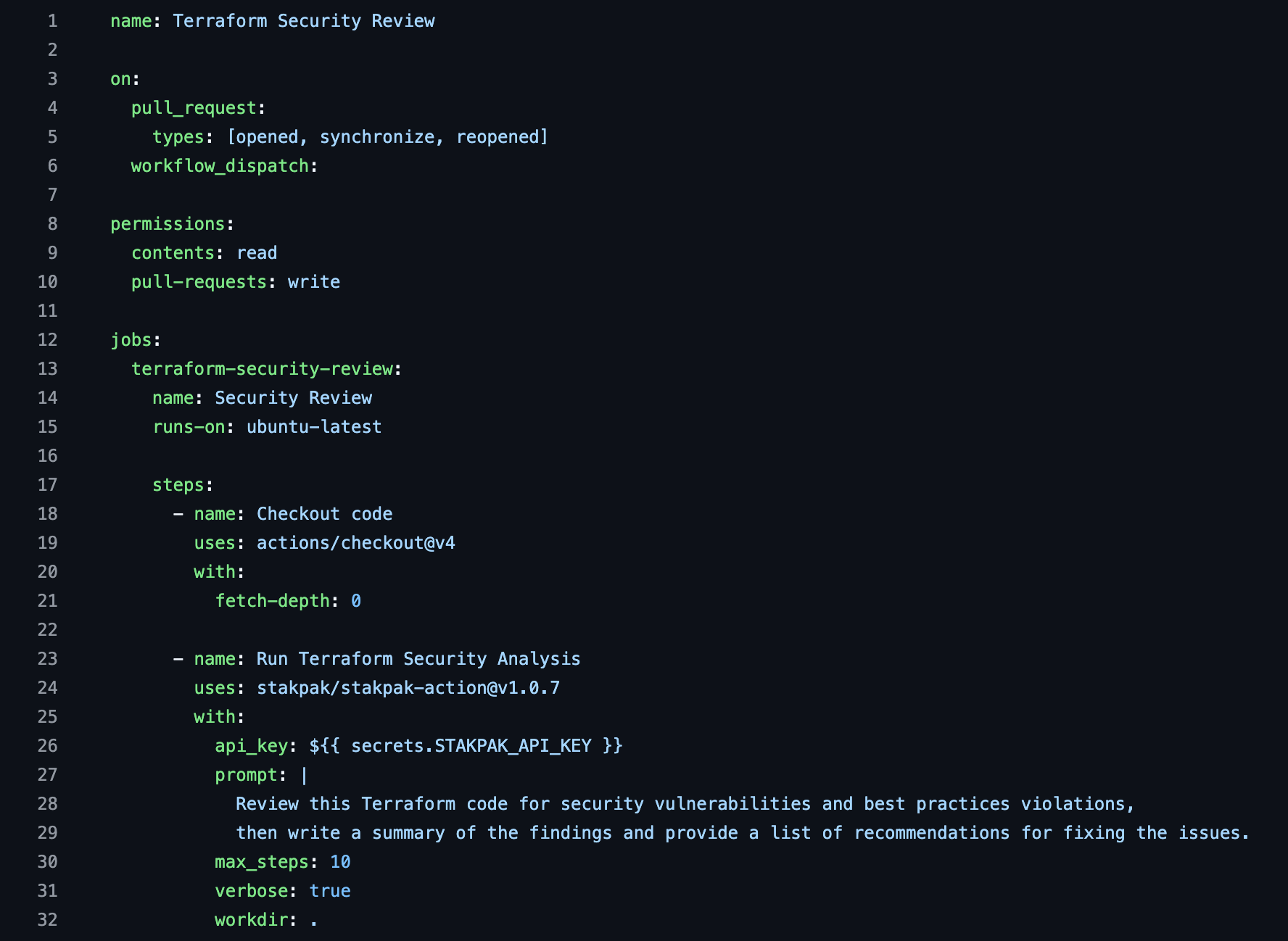

Analyze existing policies, write secure ones on the fly, and generate audit scripts. Skip the IAM documentation rabbit hole.

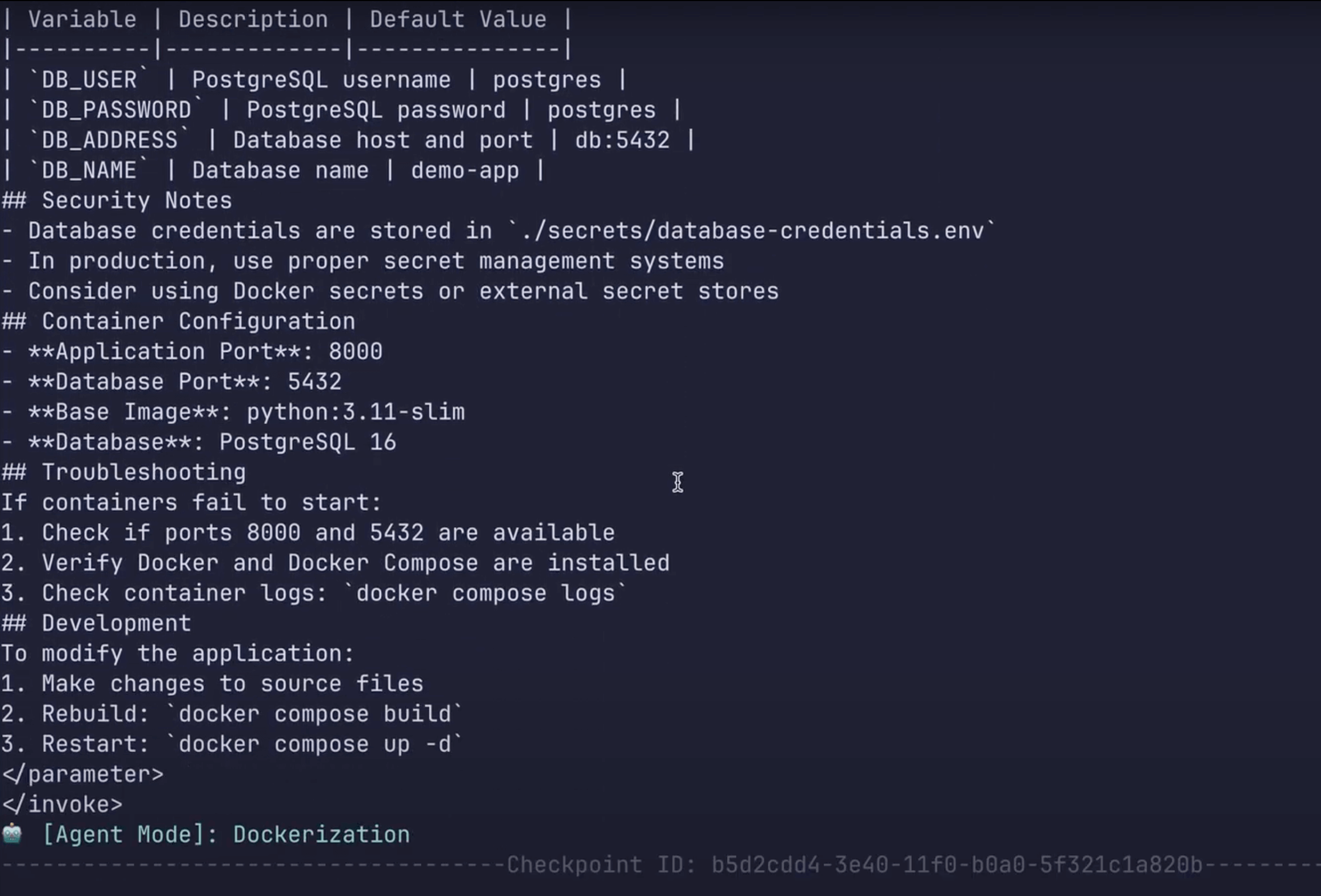

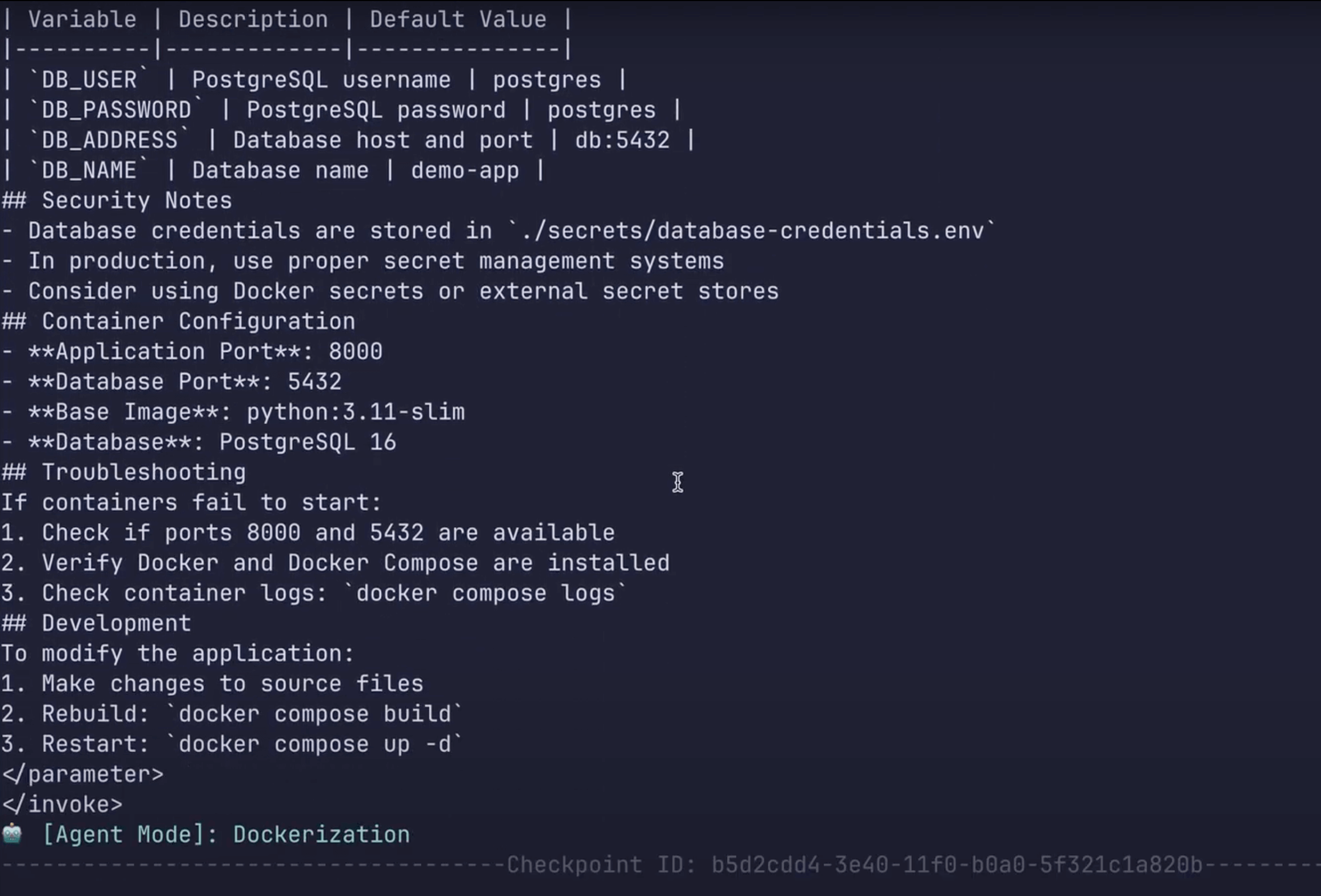

Generate production-ready Dockerfiles with security best practices baked in. No more Stack Overflow copy-paste.

Works with your stack

Speaks your language

You're a developer, not a sysadmin

Built for devs who hate ops

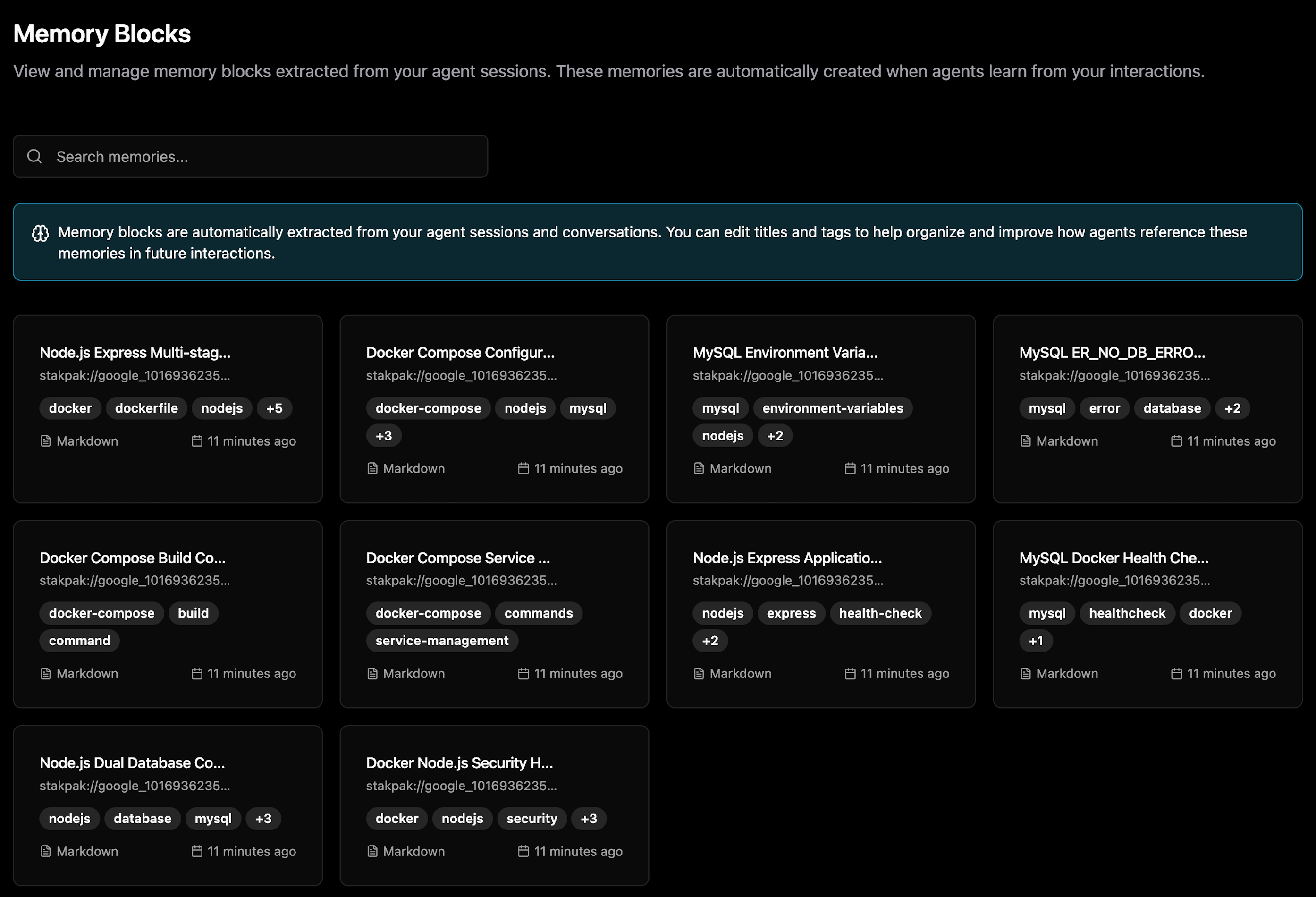

You didn't sign up to debug Kubernetes. Stakpak handles the infra so you can ship features. Works in your terminal, CI/CD, wherever you already are.

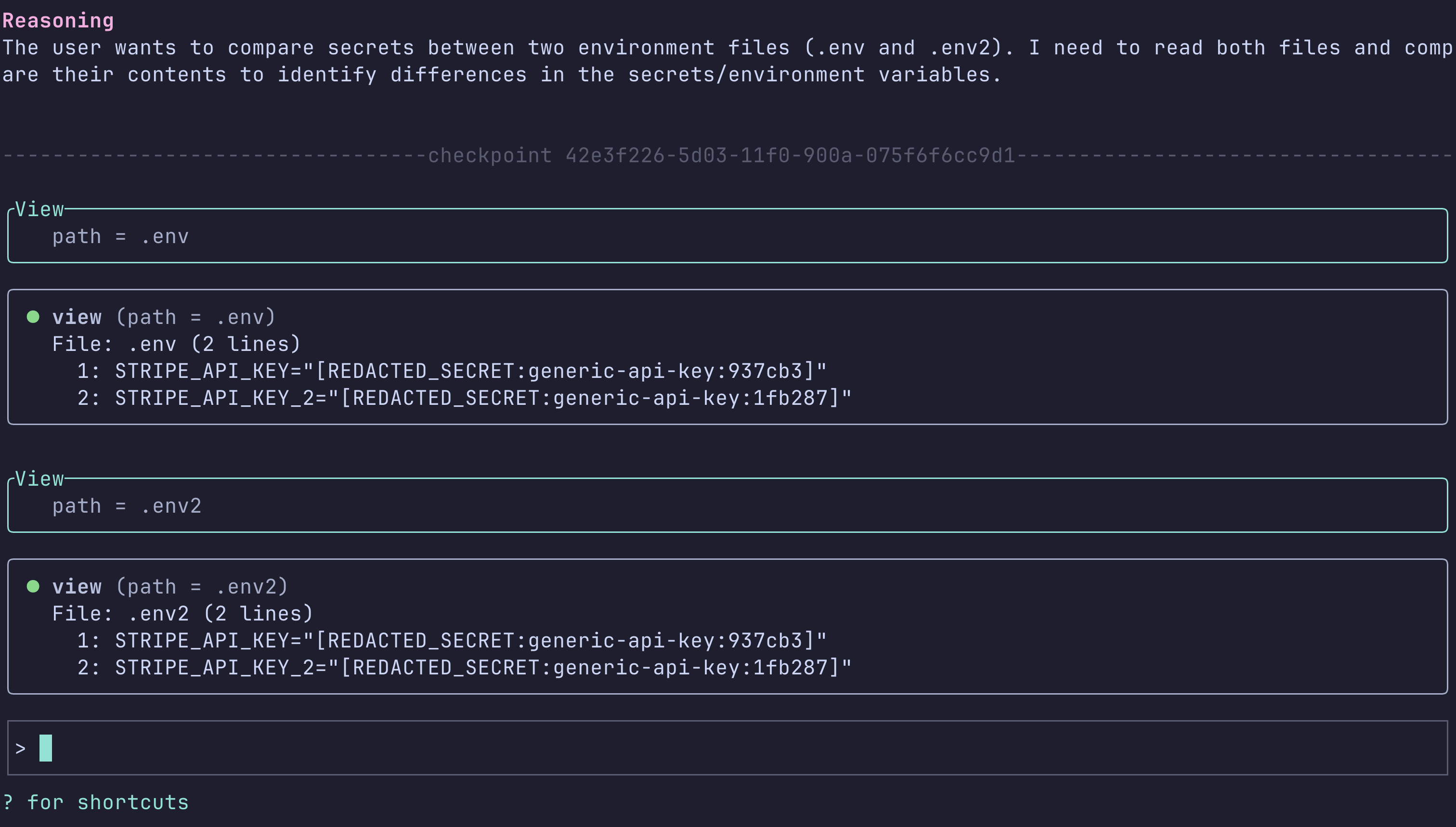

Security without the security team

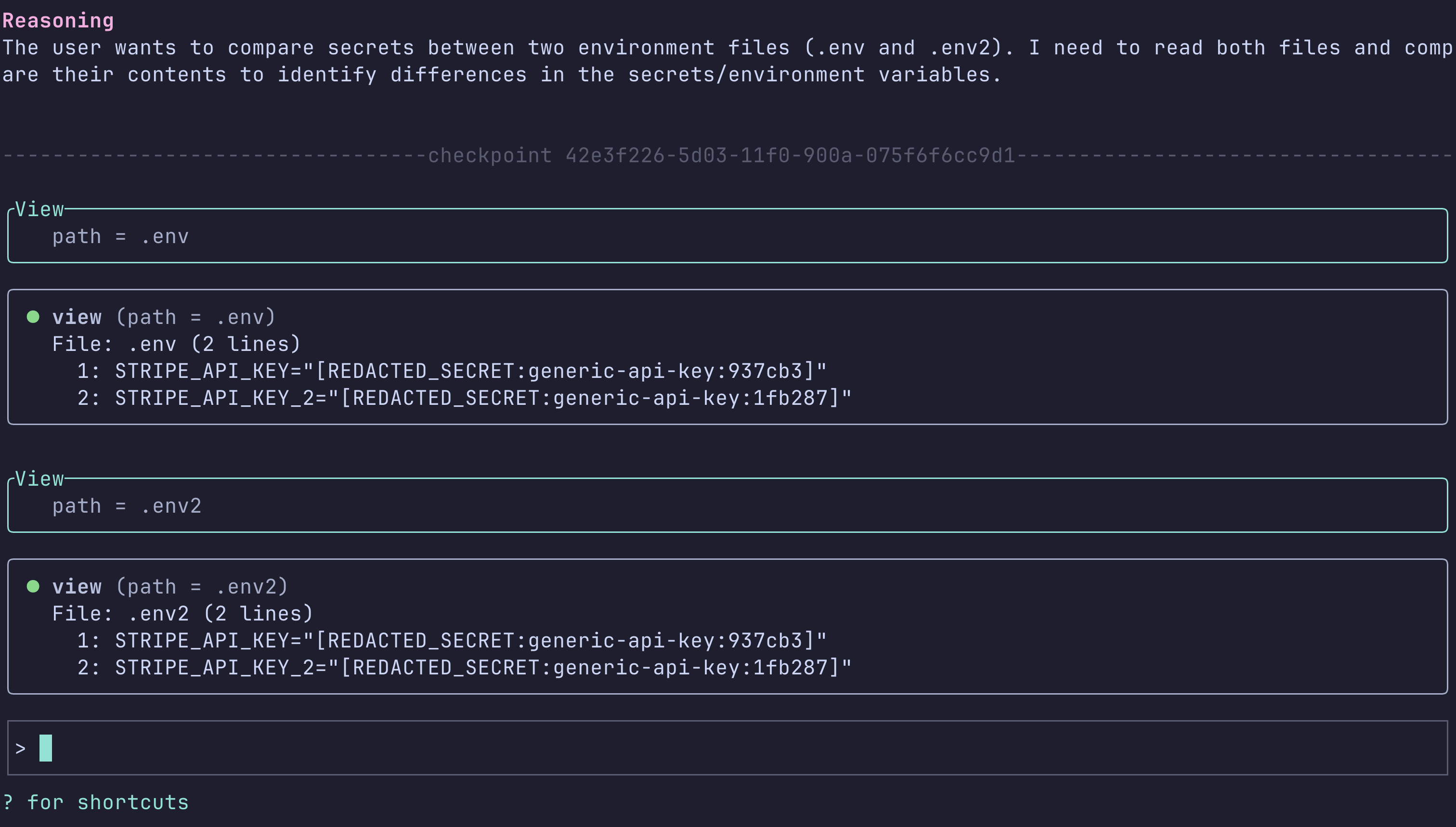

Auto-detects and redacts 210+ secret types. Guardrails prevent destructive ops. You get production-grade security without becoming a security expert.
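
To make the idea of secret redaction concrete, here is a minimal sketch of pattern-based redaction. It is not Stakpak's implementation: the `SECRET_PATTERNS` table, the `redact` function, and the placeholder format are all hypothetical, and it covers only two secret shapes for illustration.

```python
import re

# Hypothetical pattern table for illustration only; a real redactor
# would cover far more secret types than these two.
# Assumption: an AWS access key ID is "AKIA" followed by 16 uppercase
# letters or digits.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
}


def redact(text: str) -> str:
    """Replace every match of a known secret pattern with a labeled placeholder."""
    for name, pattern in SECRET_PATTERNS.items():
        text = pattern.sub(f"[REDACTED:{name}]", text)
    return text


if __name__ == "__main__":
    # AKIAIOSFODNN7EXAMPLE is the placeholder key used in AWS documentation.
    print(redact("export AWS_ACCESS_KEY_ID=AKIAIOSFODNN7EXAMPLE"))
```

Redacting before text leaves the machine means the model only ever sees the placeholder, so a leaked prompt or log does not expose the underlying value.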

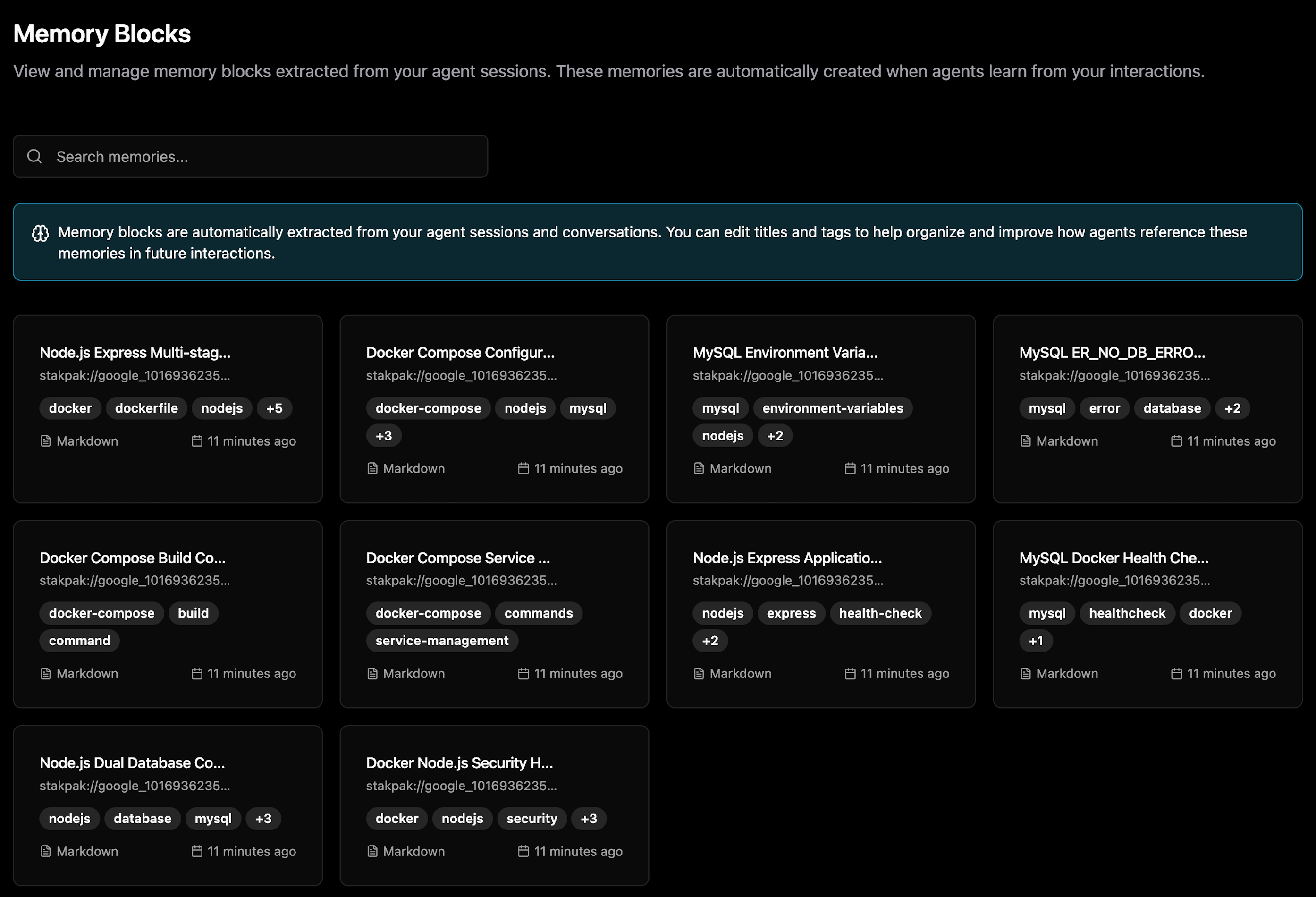

Knows your stack already

Analyzes your existing setup and adapts. No more copy-pasting from Stack Overflow or asking 'how does our infra work again?'

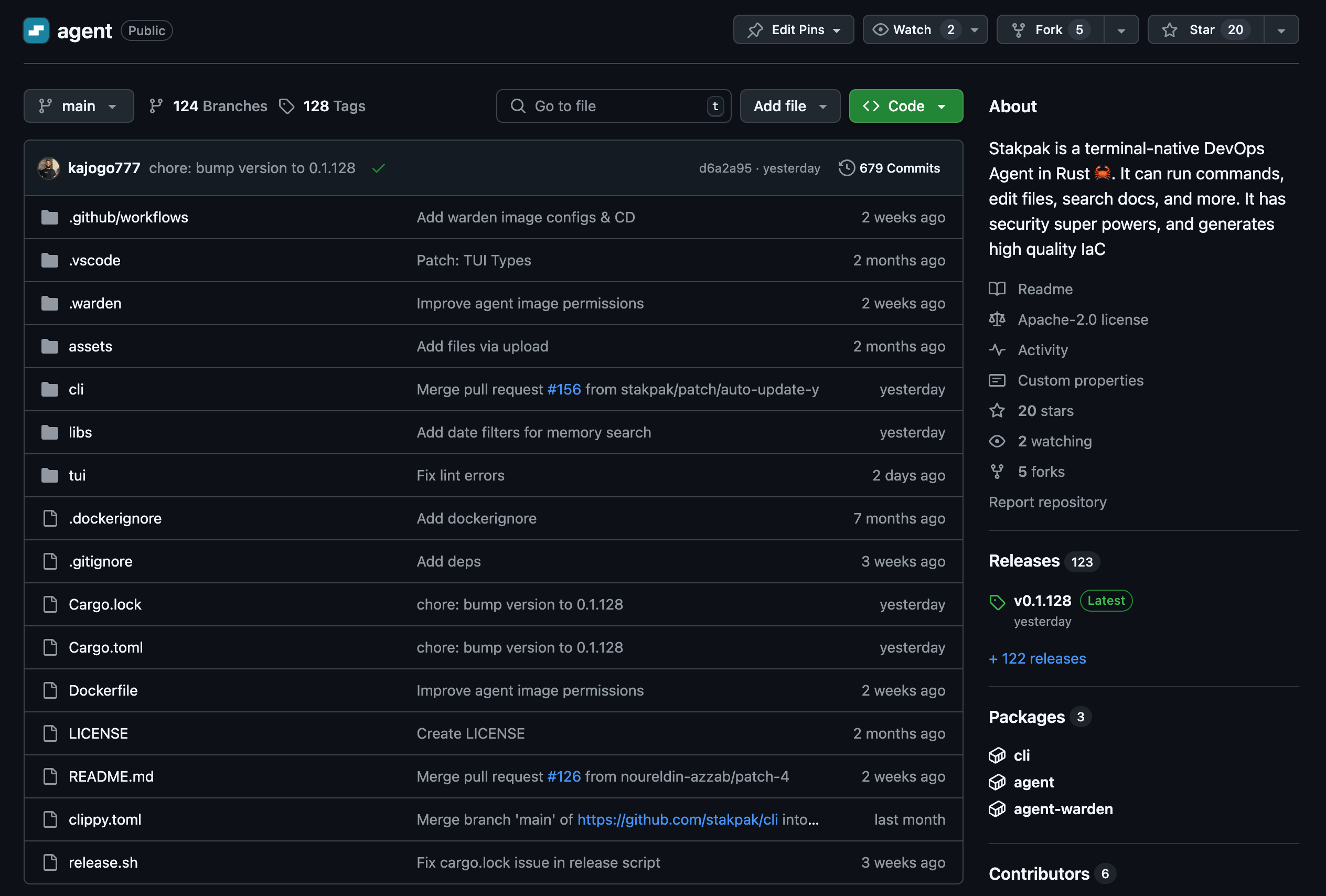

Open-source and in Rust

The agent code is open-source and built in Rust for portability, reliability, and transparency. Contribute to the project, audit the code, and customize it to fit your needs.
